The Role of GPUs in Data Center AI Workloads

21 September 2025

Let’s take a moment and picture this: you're watching your favorite sci-fi flick, where an advanced AI runs everything—from self-driving cars to sophisticated robots. While it may seem like pure movie magic, there’s some real tech wizardry happening behind the scenes in the real world. And the not-so-secret sauce that makes these artificial intelligences tick? GPUs.

Yep, those mighty Graphics Processing Units (GPUs) aren’t just for gaming anymore. They’ve taken center stage in powering the ever-growing demands of AI workloads in modern-day data centers. Buckle up, because we’re diving into how and why GPUs have become the MVPs in the world of AI.

Wait, GPUs in Data Centers? Aren’t Those for Gamers?

Totally valid question. Traditionally, GPUs were the go-to for rendering mind-blowing graphics in video games. They handled complex image processing way faster than CPUs, which made them ideal for gaming setups.

But a funny thing happened on the way to the arcade: researchers realized that the same math-heavy architecture that made GPUs awesome at graphics also made them incredible at handling AI tasks. We're talking about monster-level parallel processing power: think thousands of cores working simultaneously to crunch numbers at lightning speed.

So, while CPUs are like a brilliant student who does homework meticulously, one step at a time, GPUs are the class clown who somehow solves 100 math problems at once while juggling bananas. Impressive, right?

Why AI Loves GPUs: A Match Made in Silicon Heaven

Alright, let’s get into why GPUs and AI are like peanut butter and jelly (with none of the stickiness).

1. Parallelism: AI’s Best Friend

AI, particularly deep learning, involves a metric ton of matrix math. We’re talking enormous neural networks, each with millions (even billions!) of parameters. Running these networks means doing the same computations over and over again, but with different data.

Enter the GPU. With thousands of smaller cores, GPUs can process multiple operations at the same time. That's parallel processing in action. This is exactly what AI frameworks like TensorFlow and PyTorch thrive on.
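
To make the "same computation, different data" idea concrete, here's a minimal sketch in plain Python. The `matmul` helper and the tiny matrices are made up for illustration, and nothing here actually runs on a GPU. The point is only that every output cell is computed independently of the others, which is the property a GPU exploits by handing cells to different cores.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # Every output cell depends only on one row of `a` and one column of `b`,
    # so all rows * cols cells could in principle be computed at the same time.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


inputs = [[1, 2], [3, 4]]    # two toy input samples, two features each
weights = [[5, 6], [7, 8]]   # a tiny, hypothetical "layer" of weights
outputs = matmul(inputs, weights)
print(outputs)  # [[19, 22], [43, 50]]
```

A real framework would hand this same operation to the GPU as one big batched call instead of looping in Python.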

2. Training vs. Inference: GPUs Excel at Both

Training an AI model is like teaching a dog new tricks: it takes time, repetition, and yes, a lot of data. GPUs accelerate this training process dramatically. What would take days or even weeks on a CPU can be done in hours on a high-end GPU.

But wait, there’s more! Once the model is trained, it enters inference mode: basically, putting those learned tricks to use. GPUs handle inference like pros, too. Whether it’s voice recognition on your phone or real-time object detection in videos, GPUs make it swift and seamless.
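
If the training/inference split feels abstract, here's a toy sketch in plain Python. It assumes a one-parameter model `y = w * x` and a made-up dataset; the `train` and `infer` names are just for illustration. Training loops over the data many times to adjust `w`, while inference is a single cheap pass with the finished weight. Real models do the same thing with billions of parameters, which is where the GPU comes in.

```python
def train(xs, ys, lr=0.01, epochs=200):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
    return w


def infer(w, x):
    """Inference is a single forward pass with the learned weight."""
    return w * x


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # toy data that follows y = 2x
w = train(xs, ys)            # the slow, repetitive part
prediction = infer(w, 5.0)   # the fast part, close to 10
```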

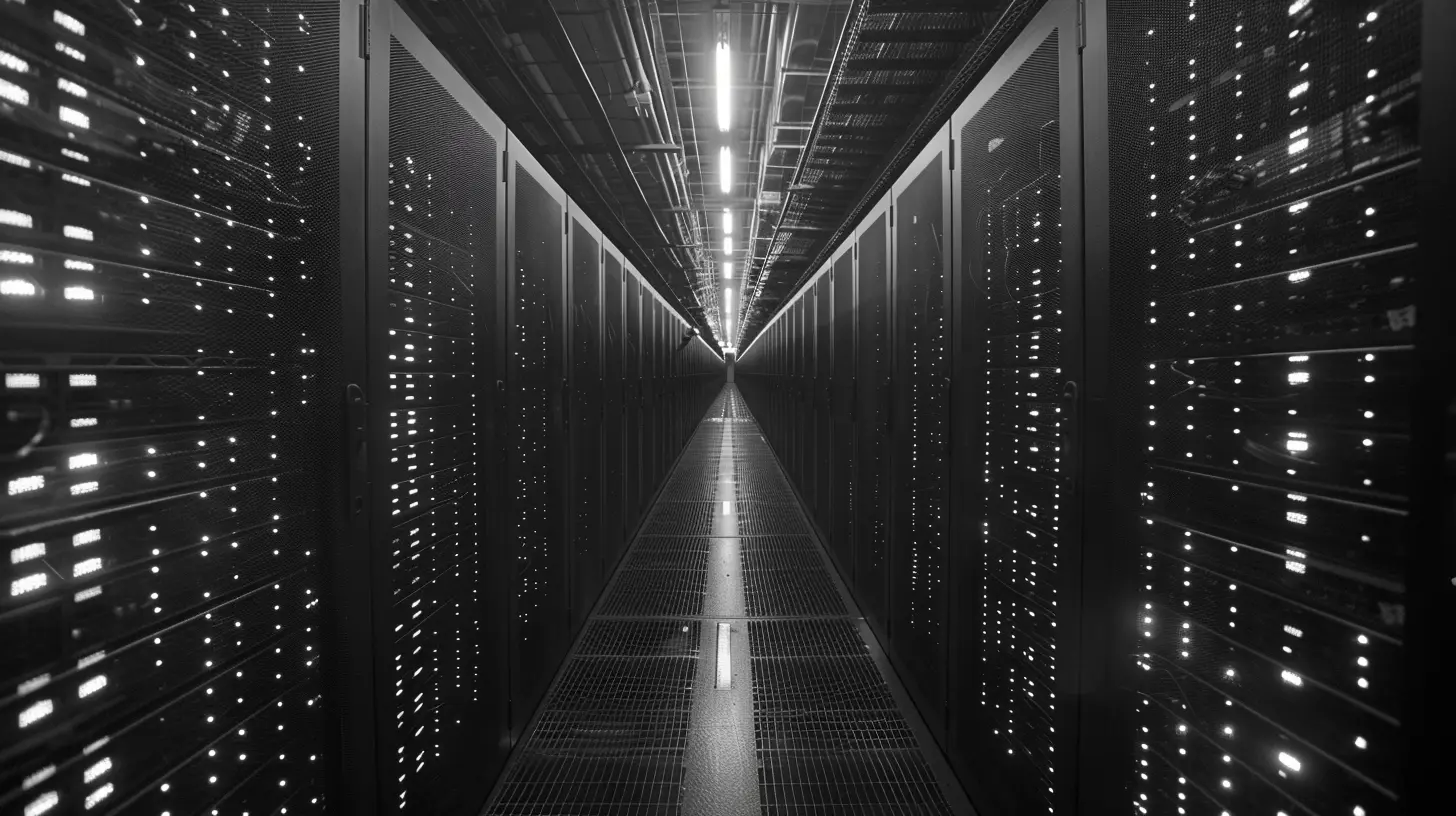

3. Scalability: Stack ‘Em Like Lego Bricks

Modern GPUs can be scaled up inside massive data centers. Think racks upon racks of GPUs working together like a well-oiled hive mind. With tech like NVIDIA's NVLink and PCIe Gen 5, data can be shared across GPUs at blazing speeds.

Need more power? Just plug in more GPUs. It’s literally plug-and-play (okay, maybe not that simple, but you get the idea).
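
One common way to put many GPUs to work on a single model is data parallelism: split each batch across devices, let each compute a gradient on its shard, then average the results. The sketch below simulates that in plain Python. The worker count, the toy data, and the function names are all assumptions for illustration, and a real setup would use a framework's distributed tooling rather than a list comprehension.

```python
def gradient(w, batch):
    """Mean-squared-error gradient for the model y = w * x on one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w, batch, num_workers):
    """Split the batch into equal shards, compute per-shard gradients, average them.

    Assumes len(batch) is divisible by num_workers.
    """
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    local_grads = [gradient(w, shard) for shard in shards]  # one per simulated GPU
    return sum(local_grads) / num_workers                   # stand-in for an all-reduce


batch = [(float(x), 2.0 * x) for x in range(1, 9)]  # 8 toy samples of y = 2x
full = gradient(0.5, batch)
sharded = data_parallel_gradient(0.5, batch, num_workers=4)
```

With equal-sized shards, the averaged gradient matches the full-batch one, so adding workers changes how fast the work gets done, not what gets computed. The averaging step is also where fast interconnects earn their keep.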

The Rise of the AI-Powered Data Center

Data centers have become the digital backbone of... well, pretty much everything. From social media algorithms to streaming recommendations and smart assistants, you're interacting with AI-powered services all the time. And the horsepower behind much of that AI comes from, you guessed it, GPUs.

Let’s break down the transformation.

Traditional vs. AI-Driven Data Centers

In the past, data centers were primarily designed for storing data and running basic web applications. The emphasis was on CPUs, network switches, and cold storage.

Now? Data centers need to handle AI workflows that demand tons of computing muscle. That means:

- High-density GPU servers

- Faster network interconnects

- Energy-optimized cooling systems

- AI-specific infrastructure like NVIDIA DGX or Google TPU clusters

Basically, today’s data centers are less like libraries and more like high-tech gyms for buffing up AI models.

Key Players: Who’s Leading the GPU-AI Revolution?

It wouldn’t be a tech blog without name-dropping some cool players in the field. So let’s tip our hats to the giants who are making GPU magic happen:

NVIDIA: The OG AI Enabler

NVIDIA pretty much leads the pack. Their GPUs (like the A100 and H100 Tensor Core beasts) are in everything from research labs to Amazon Web Services. CUDA, NVIDIA’s proprietary parallel computing platform, is what makes their GPUs so AI-optimized.

Bonus: They even have prebuilt systems like DGX for out-of-the-box AI training power.

AMD: Not Just a Gamer’s Choice

AMD isn’t sitting on the sidelines either. With its Instinct MI series GPUs (formerly branded Radeon Instinct), AMD is aiming squarely at AI workloads. Plus, their open-source ROCm platform lets developers build without being locked into proprietary tools.

Google TPUs: The Wild Card

Okay, not a GPU per se, but worth a mention. Google’s Tensor Processing Units (TPUs) are specially designed for AI and machine learning. They give GPUs a run for their money, especially in Google Cloud’s AI services.

Real-Life Examples: GPU Power in Action

Need some real-world juice to back up the hype? Let’s look at what’s already happening out there.

Self-Driving Cars (a.k.a., The Holy Grail of AI)

Each autonomous vehicle crunches data from dozens of sensors in real time: cameras, radars, lidars, you name it. That’s a massive workload. But thanks to embedded GPUs like NVIDIA’s Drive AGX, these cars can make split-second decisions safely.

Chatbots and Language Models

From ChatGPT to your bank’s virtual assistant, AI language models are getting more lifelike. These models are often trained on GPU clusters with hundreds (or thousands) of units. The result? Chatbots that can crack jokes, write poems, or help you reset your password without losing their digital cool.

Healthcare and Drug Discovery

Yep, GPUs are being used to simulate protein folding and analyze X-rays. This speeds up drug discovery and helps doctors detect diseases early. Less time, more accuracy, better outcomes.

How cool is that?

Challenges: It’s Not All Smooth Sailing

Now, before we crown GPUs the flawless AI kings, let’s talk about a few bumps on the road.

Energy Consumption: Hot Hardware, Hotter Bills

GPUs are powerful, but they’re also power-hungry. Running a GPU data center is like lighting up a small town. That’s why companies are investing in green energy, better cooling systems, and more efficient chips.

Supply Chain Issues

Remember the chip shortage drama? Yeah, GPUs weren’t spared. Demand for GPUs in gaming, crypto, and AI meant everyone was scrambling for limited supply. Things are getting better, but it’s still something to watch.

Cost: Quality Comes at a Price

High-end AI GPUs can cost tens of thousands of dollars each. Add in the racks, cooling, software licenses, and maintenance... you're looking at a serious investment. Thankfully, cloud providers offer GPU power on demand, making it more accessible.

What’s Next? The Future of GPUs in AI Workloads

AI is moving fast, and GPUs are evolving to keep up. Expect to see:

- Smarter GPUs with built-in AI accelerators and tensor cores

- Better software for managing, optimizing, and scaling GPU usage

- Hybrid architectures combining CPUs, GPUs, and custom chips like FPGAs and TPUs

- Quantum AI? Okay, we’re not quite there yet, but hey, a nerd can dream!

Companies are also developing domain-specific chips, so we might see specialized GPUs for tasks like NLP or medical imaging in the near future.

So, Are GPUs the Future of AI in Data Centers?

In a word: absolutely.

GPUs have proven they’re not just a passing trend; they're a vital part of the AI ecosystem. Their ability to handle immense parallel workloads, adapt to different stages of AI (training and inference), and scale across data centers makes them indispensable.

Whether you're training a self-aware robot overlord (hopefully a friendly one) or just creating smarter chatbots, GPUs are the quiet workhorses making it all possible.

So next time you hear “GPU,” don’t just think gaming—think artificial intelligence, smart cities, personalized medicine, and a future powered by silicon brains.

Cool, huh?

All images in this post were generated using AI tools.

Category: Data Centers

Author: Gabriel Sullivan

Discussion

1 comment

Cooper McGehee

Great insights! GPUs are indeed pivotal for enhancing efficiency in data center AI workloads.

October 1, 2025 at 11:48 AM

Gabriel Sullivan

Thank you! I'm glad you found the insights valuable. GPUs truly are game-changers for AI efficiency in data centers.